No one knows how the most powerful name in news really distributes the news.

That's why this week's allegations about liberal bias on Facebook are resonating even among people who don't believe the anonymous sources making the allegations.

On Tuesday a top Republican in Washington, Senator John Thune, demanded answers from Facebook CEO Mark Zuckerberg.

"Facebook has enormous influence on users' perceptions of current events, including political perspectives," he wrote.

And yet the company's actions are often shrouded in mystery.

"The facts seem to be unclear on what Facebook does and doesn't do," digital media executive Jason Kint said. "Black boxes and algorithms" — like Facebook's famous news feed algorithm — "invite concern without years of reputation and trust."

Facebook's power has also stoked fear and envy among many publishers. For many mobile users, Facebook IS the Internet; instead of seeking out news web sites, they click the links that show up in the personalized Facebook news feed.

Facebook has a unique ability to turn on a firehose of traffic — and the ability to turn it off. Publishers may not live or die by Facebook alone, but they certainly thrive or struggle based on the company's decisions.

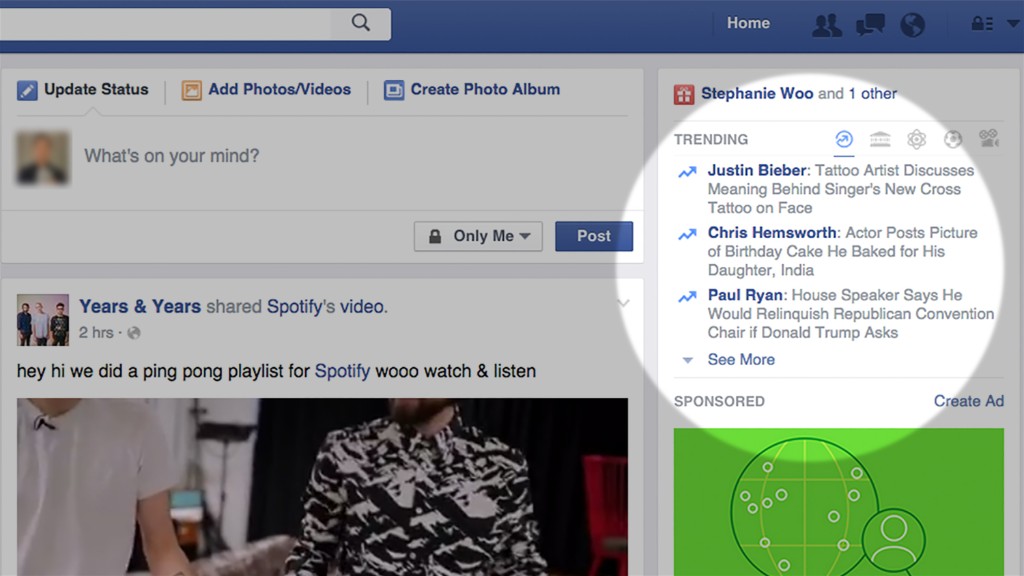

So Gizmodo's recent reports about the production of Facebook's "trending" stories have gained a ton of attention. Journalists, academics and some average users want to understand how and why Facebook does what it does.

"As the No. 1 driver of audience to news sites, Facebook has become the biggest force in the marketplace of ideas. With that influence comes a significant responsibility," Poynter ethicist Kelly McBride wrote.

That's why McBride, a former ombudsman for ESPN, offered what she called a "crazy idea" in a Poynter blog post on Monday: "What if Facebook (and other companies that have clear ability to influence the marketplace of ideas) had a public editor, like The New York Times does. That person would be able to research and write about the company from the public's point of view, answering questions and explaining the values that drive certain decisions."

On Monday Gizmodo cited anonymous former contractors who said colleagues sometimes suppressed news about conservatives and links to right-leaning web sites.

Other anonymous former Facebook workers disputed the account. And a Facebook spokesman said Tuesday that "after an initial review, no evidence has been found that these allegations are true."

To be clear, there is no concrete evidence of systemic bias at Facebook. The "trending" box regularly includes news about conservative news sources.

But it is possible that some individual workers may have rejected specific stories. Monday's report advanced a long-held view among some prominent conservatives that tech giants like Facebook are stacking the deck against them.

Some liberals, in turn, said conservatives were just seizing on another reason to claim victimhood status.

Set that aside for a moment. How does Facebook decide what users see? Should human editors be involved?

"That this is such a big topic today is reflective of Facebook's massive gap in trust as a source of news," said Kint, the CEO of Digital Content Next, a trade group that represents publishers like the AP, Bloomberg, Vox, and CNN's parent Turner.

A Facebook spokesman said the company has "worked to be up front about how Trending and News Feed work."

But outsiders who study Facebook say there's a lot they don't know. "The big problem isn't that a couple of human editors fiddled with the Trending Topics," Fortune's Mathew Ingram wrote Monday. "It's that human beings are making editorial decisions all the time via the social network's news-feed algorithm, and the impact of those decisions can be hugely far-reaching — and yet the process through which those decisions are made is completely opaque."

Facebook frequently runs experiments to change and improve the news feed. Sometimes users see more news stories from publishers; sometimes they see fewer.

The "trending" box is produced partly by algorithms and partly by workers called "news curators." In the wake of Monday's Gizmodo report, Facebook said "popular topics are first surfaced by an algorithm, then audited by review team members to confirm that the topics are in fact trending news in the real world and not, for example, similar-sounding topics or misnomers."

The curators weed out hoaxes, spammy stories and other objectionable content. Facebook says there are specific guidelines that "ensure consistency and neutrality. These guidelines do not permit the suppression of political perspectives."

Furthermore, the company says, "We do not insert stories artificially into trending topics, and do not instruct our reviewers to do so."

But even attempting to ensure "neutrality" places Facebook in a quasi-journalistic role, reinforcing its de facto responsibility as one of the world's biggest publishers.