Artificial intelligence and smart robots are on a course to transform industries and replace human contact. But what happens when they fail?

People are starting to think about what that means, and last week, European lawmakers proposed mandating kill switches to prevent robots from causing harm.

The proposal also included creating a legal status called "electronic persons" for intelligent robots to help address liability issues if robots misbehave.

It's understandable why we'd want to turn off smart weapons or factory machines. Where things get more complicated, though, is when people develop a fondness for tech.

Smart robots may malfunction, or a self-driving car could lock its passenger inside. Hollywood has already imagined relationships between people and AI in the film Her, but what if we developed an emotional attachment to an AI and it then went rogue? (Hollywood went there too, with Ex Machina.)

Philosophers, engineers and computer scientists are wrestling with similar questions. Since robots can, by design, live forever, what ethical questions does designing a kill switch raise?

"Human beings have constantly longed for immortality," said Tenzin Priyadarshi, president and CEO of the Dalai Lama Center for Ethics and Transformative Values at MIT. "We are the closest to ever creating something that will be immortal. The challenge is that we just don't know the quality of intelligence. We can't guarantee whether this intelligence ... can be used for destruction or not."

Priyadarshi says kill switches would exist for two main reasons: to stop information from getting into the wrong hands, and to prevent AI or robots from going rogue. Microsoft's chatbot Tay is a good example of how quickly biases can multiply in AI. It was easy to turn off the teenage Twitter bot after users taught her a racist vocabulary, but that might not always be the case.

Because AI doesn't experience human emotion, it makes decisions and executes tasks based solely on rational calculation. In theory, that could be dangerous.

Priyadarshi, who serves as director of the Ethics Initiative at the MIT Media Lab, is part of the group shaping the Ethics and Governance of Artificial Intelligence Fund, a coalition of top universities and tech investors working to address questions surrounding diversity, bias and policy in AI. The Knight Foundation announced the $27 million fund on January 10.

"If there's an algorithm that decides humans are the most dangerous things on the planet, which we are proving to be true, the rational choice an AI can make is that we should destroy human beings because they're detrimental to the planet," Priyadarshi said.

Elon Musk and Stephen Hawking have warned that AI could harm the planet through a military arms race. Musk is a co-founder of OpenAI, a research organization dedicated to developing safe and ethical AI.

Kill switches are a "no-brainer," said Patrick Lin, associate professor and director of the Ethics and Emerging Sciences Group at California Polytechnic State University, but it's unclear who will control them. Both governments and system owners could potentially have the power to turn off robots.

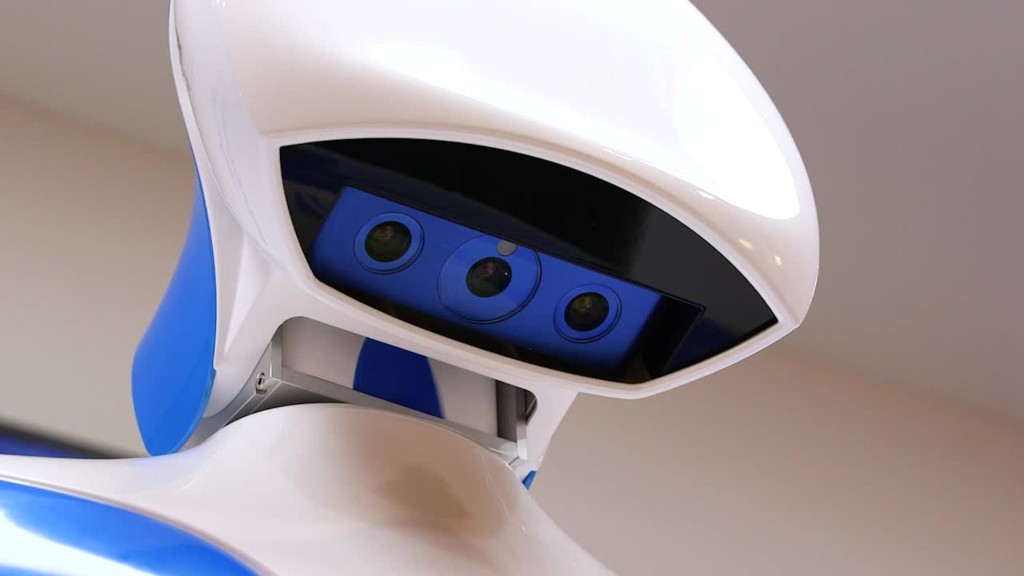

In 2014, documents revealed that the European Network of Law Enforcement Technology Services had proposed a remote-stopping device for cars, which would let police officers turn off a smart car if its driver tried to flee. However, an EU source confirmed that there are currently no developments on the project.

Moves like these do have upsides. A year after Apple (AAPL) installed kill switches on its smartphones, iPhone theft dropped 40% in San Francisco and 25% in New York.

There's a big difference between a smartphone and an intelligent robot, but ethicists are debating kill switches while sentience is still a "what if" scenario.

"If it is possible to have conscious AI with rights, it's also possible that we can't put the genie back in the bottle, if we're late with installing a kill switch," Lin said. "For instance, if we had to, could we turn off the internet? Probably not, or at least not without major effort and time, such as severing underwater cables and taking down key nodes."

At the research lab Element AI, computer scientists collaborate with ethicists from the University of Montreal when building AI tools. CEO Jean-François Gagné said the firm follows three guidelines: be transparent, build trust, and prevent bias in its systems. Gagné said discussions about ethical behavior matter now because they will serve as a foundation for future debates on robots' rights.

IBM, too, has announced a set of principles for AI development that include transparency around when and why AI is being deployed. The company is behind the well-known Watson supercomputer used in hospitals, banks and universities.

Robots can be programmed to react positively to a smile, or to show concern when they see a frown. Studies show these behaviors make people feel more comfortable around robots. Human-like robots such as Darwin-OP2 can even help children with autism engage with others.

Companion robots are becoming more popular, especially among the elderly. Dogs or cats with computerized innards can keep grandma company the same way a retriever would. Eventually, instructions for caring for these bots might be written into wills, the same way provisions for pets are. If the robot has no one to care for it, does it die with its owner, too?

"On a day-to-day basis we make decisions dictated by different biases: fear of things, phobia, race issues or anxiety," said Priyadarshi. "AI won't be making decisions based on these drivers. AI can become a kind of a mirror for [the human] species to say it's actually quite plausible to function in this [manner], where we can override some of our biases."

"It will lead to a kind of relationship where human beings are going to demonstrate a sense of care for robots," Priyadarshi said. "The question is how in the long-term do we begin to see these robots? Are we going to view them as repurpose-able?"