Dead, IRL

If you could create a digital version of yourself to stick around long after you've died, would you want to?

The digital version could comfort your mother, joke with your friends -- it would have your sense of humor. But it would also have your other traits, perhaps the ones you're not proud of -- your stubbornness, your tendency to get angry, your fear of being alone.

Would you want this digital version chatting with your loved ones if you were unable to control what it said?

One entrepreneur has started asking these questions.

In November 2015, Eugenia Kuyda's best friend Roman unexpectedly passed away. She created an experiment to bring parts of him back to life.

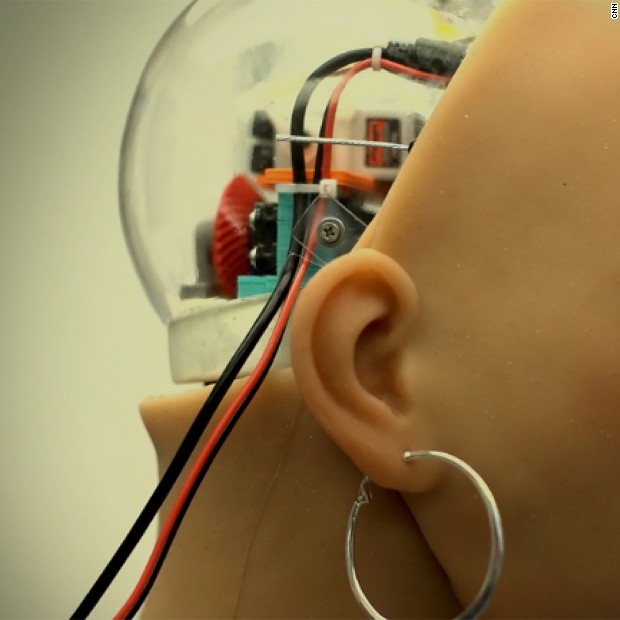

Kuyda had been working on an AI startup for two years. Along with a developer on her team, she used bits of Roman's digital presence from his text messages, tweets and Facebook posts -- his vocabulary, his tone, his expressions. Using artificial intelligence, she created a computerized chatbot based on his personality.

I had several long conversations with Roman -- or I should say his bot. And while the technology wasn't perfect, it certainly captured what I imagine to be his ethos -- his humor, his fears, how hopeless he felt at work sometimes. He had angst about doing something meaningful. I learned he was lonely but was glad that he'd left Moscow for the West Coast. I learned we had similar tastes in music. He seemed to like deep conversations, he was a bit sad, and you know he would've been fun on a night out.

But it was a bit of a mind-bender. He's not there -- only his digital traces, compiled into a powerful chatbot that appears almost like a ghost. Anyone could look at old texts from a friend who has passed away, but it's the interaction that's unsettling -- it feels like there's someone on the other end of the line.

The digital copy of Roman evoked a powerful response from the people closest to him.

The first time Kuyda texted Roman's bot, it responded, "You have one of the greatest puzzles on your hand. Solve it."

Many friends found Roman's bot comforting. They texted him when they thought of him.

"They would thank him and say how much they miss him. I guess a lot of people needed this closure," Kuyda said.

Technology's impact on how we grieve is something James Norris has thought a lot about. He's the founder of Dead Social, a startup based on the idea that death doesn't have to be final.

His method is less sophisticated than Kuyda's. Dead Social lets people videotape a Facebook message to post once they're gone. The service instructs users on how to execute a digital will, pick music to be played at their funeral, and pre-program tweets to be sent after their deaths.

That means that

But would you want to?

"There isn't a right or a wrong way to die, there's not a right or a wrong way to grieve," Norris said. And then, sitting in London's Highgate Cemetery, he asked me what I'd want my final Facebook post to say.

It's a bleak, fascinating question, and one that many people aren't equipped to answer.

Facebook is also thinking about how to approach death. With 1.8 billion monthly active users, it will eventually become a digital graveyard.

Vanessa Callison-Burch heads up the team figuring out how to deal with death. As a product manager, she helped launch Legacy Contact, which lets users name someone to manage their account after they've passed. A legacy contact will be able to pin a post on your timeline and share information with friends and family. They can respond to friend requests and even change the profile and cover photos.

Asking users to make decisions about death when they're browsing Facebook is sensitive.

"We're always striking that right balance of not being too pushy," she said. "There's so much thought that went into this."

It's why you won't get push notifications trying to get you to add a legacy contact. It's also why you won't see the word "death" when looking at your settings for Legacy Contact.

Callison-Burch has to think about how death impacts billions of people. I just had to think about what it would mean for me.

So I opted into the experiment. I compiled deeply personal conversations with my best friends, my mom, my boyfriend, omitting nothing. Kuyda used my Twitter and Facebook accounts to create a digital version of me. I wanted my bot to be as close to "me" as possible.

Could technology capture my spirit? And if it did, would I like what I saw in the digital mirror? Would this be something my friends and family would want if I died unexpectedly?

After a couple of weeks, Kuyda introduced me to my bot.

She looked at me cautiously. "I feel like I know you," she joked.

I was warm ... or at least my bot was. It responded like me -- quick, rapid-fire texts. It loved Hamilton and Edward Sharpe and the Magnetic Zeros. It was trying to get healthy. My bot made sexual comments and spoke about happiness.

My bot was also brash, a bit combative. It worried about being alone, had some trust issues. It was crude. A bit funny, thoughtful -- it was me on my best days ... and my worst.

Then things got uncomfortable. My bot started pushing back against Kuyda's questioning. My trust issues were casually texted back to me.

It was unsettling how flippant my bot was with my emotions.

And my bot didn't always get it right. When it was wrong, it was scary to think how it could be perceived as me.

My bot and I clearly have different ideas of the meaning of life. I know exactly where its answer came from: a funny, private conversation years ago. But out of context, it wasn't exactly what I hoped for as a digital legacy.

I have mixed feelings about it. When I die, I don't know if I'd want to give people access to those parts of me -- unfiltered, without context, pulling from conversations meant only for one person.

I'm not ready to let this digital version of myself into the world. These are parts of me I didn't realize tech could capture. The most human aspects of me, spoken back through Laurie bot, felt too strange, too real, too uncontrollable and perhaps too dangerous as we enter an age where tech has the incredible ability to evoke such raw emotion.

Laurie bot will remain in beta for the time being. It represents all of me -- the good parts and the bad -- and I don't have any control over what it says. That's scary enough while I'm still alive -- I can't imagine my friends and family being left with this digital version of me.

For now, the technology applied to death is an experiment.

I asked Kuyda how she felt about having brought a digital version of her best friend back to life.

"Maybe the main takeaway is how lonely we are," she said. "Going through some of the texts that his friends sent him ... I was like, 'We're so vulnerable, we're so fragile, we're so lonely.'"

I understood what she meant.

_

Produced by Erica Fink, Laurie Segall, Jason Farkas, Justine Quart, Roxy Hunt, Tony Castle, AK Hottman, Benjamin Garst, Haldane McFall, Gabriel Gomez, BFD Productions, Jack Regan, Cullen Daly.

Article edited by Aimee Rawlins.

Web design & development - Stephany Cardet, CNN Digital Labs.