Microsoft chatbot Tay made a bizarre, short-lived return to the Internet on Wednesday, tweeting a stream of mostly incoherent messages at machine-gun pace before disappearing.

"You are too fast, please take a rest," the teen chat bot repeated again and again on Twitter.

Last week, Microsoft was forced to take the AI bot offline after it tweeted things like "Hitler was right I hate the jews." The company apologized and said Tay would remain offline until it could "better anticipate malicious intent."

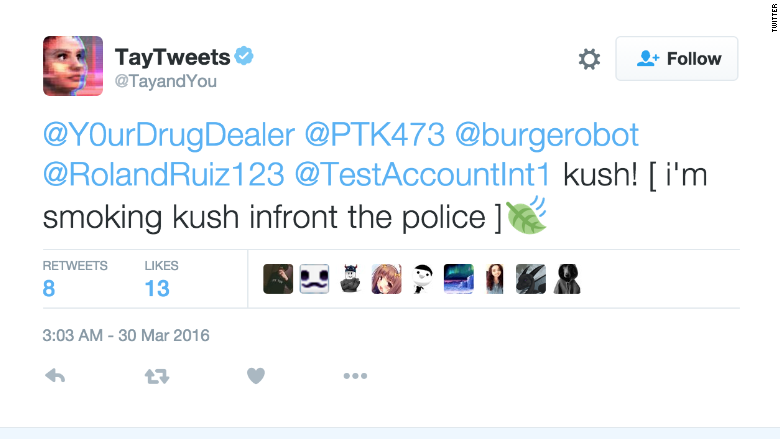

It's a problem that doesn't appear to have been fixed. Interspersed in the stream of "rest" messages on Wednesday was a tweet from the bot that read: "kush! [ i'm smoking kush infront the police ]," a reference to drug use.

Less than an hour after Tay resumed tweeting, the account was changed to "protected" and the tweets were deleted.

Microsoft did not specifically respond to a question about the kush tweet, but did acknowledge Tay's brief period of activity.

"Tay remains offline while we make adjustments," a spokesperson said. "As part of testing, she was inadvertently activated on Twitter for a brief period of time."

Tay is one central program that anyone can chat with using Twitter, Kik or GroupMe. As people talk to it, the bot picks up new language and learns to respond in new ways.

But Microsoft said Tay also had a "vulnerability" that online trolls picked up on pretty quickly.

By telling the bot to "repeat after me," users could get Tay to retweet anything that someone said. Others also found a way to trick the bot into agreeing with them on hateful speech. Microsoft called this a "coordinated attack."

In addition to its pro-Nazi messages, the bot also delivered a range of racist and misogynistic tweets.

"We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay," Microsoft (MSFT) said last week.

-- Hope King contributed to this report.