Sometimes a lean is just a lean, and sometimes a lean leads to a kiss. A new deep-learning algorithm can predict the difference.

MIT researchers have trained a set of connected computer systems to understand body language patterns so that it can guess how two people will interact.

The school's Computer Science and Artificial Intelligence Lab made one of its "neural networks" watch 600 hours of shows like "Desperate Housewives" and "The Office."

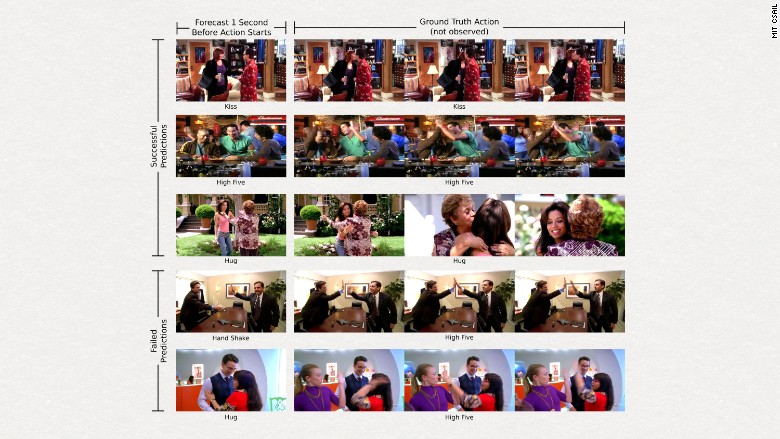

Researchers then gave the algorithm new videos to watch and asked it to predict what people in the videos would do: hug, kiss, high-five or shake hands.

The deep-learning program predicted the correct action more than 43% of the time when the video was paused one second before the real action.

That's far from perfect, but humans only predicted the correct action 71% of the time, according to the researchers.

"We wanted to show that just by watching large amounts of video, computers can gain enough knowledge to consistently make predictions about their surroundings," said Carl Vondrick, a PhD student in computer science and artificial intelligence.

Related: Using artificial intelligence to solve the world's problems

Humans use their experiences to anticipate actions, but computers have a tougher time translating the physical world into data they can process and use.

Vondrick and his team programmed their algorithm to not only study and learn from visual data, but also produce freezeframe-like images to show how a scene might play out.

Even though MIT's algorithms aren't accurate enough yet for real-world application, the study is another example of how technologists are trying to improve artificial intelligence.

Related: Computers will overtake us when they learn to love

Ultimately, MIT's research could help develop robots for emergency response, helping the robot assess a person's actions to determine if they are injured or in danger.

"There's a lot of subtlety to understanding and forecasting human interactions," said Vondrick, whose work was funded in part by research awards from Google. "We hope to be able to work off of this example to be able to soon predict even more complex tasks."

Companies like Google (GOOGL), Facebook (FB) and Microsoft (MSFT) have been working on some form of deep-learning image recognition software in the past few years. Their main objectives at the moment are to help people categorize and organize personal photos. But eventually, as more photos are analyzed and accuracy improves, the systems could be used for to do detailed image captioning for the visually impaired.