Robert Godwin Sr. was walking home from an Easter meal with his family when he was shot by a stranger, who then posted a video of the murder to Facebook.

The gruesome video stayed up for hours on Sunday before it was removed by Facebook (FB). It continues to be shared online.

"We know we need to do better," Justin Osofsky, VP of global operations at Facebook, wrote in a post Monday after the company faced criticism for its handling of the video.

"We disabled the suspect's account within 23 minutes of receiving the first report about the murder video, and two hours after receiving a report of any kind," Osofsky said. Facebook is now in the process of reviewing how videos are flagged by users.

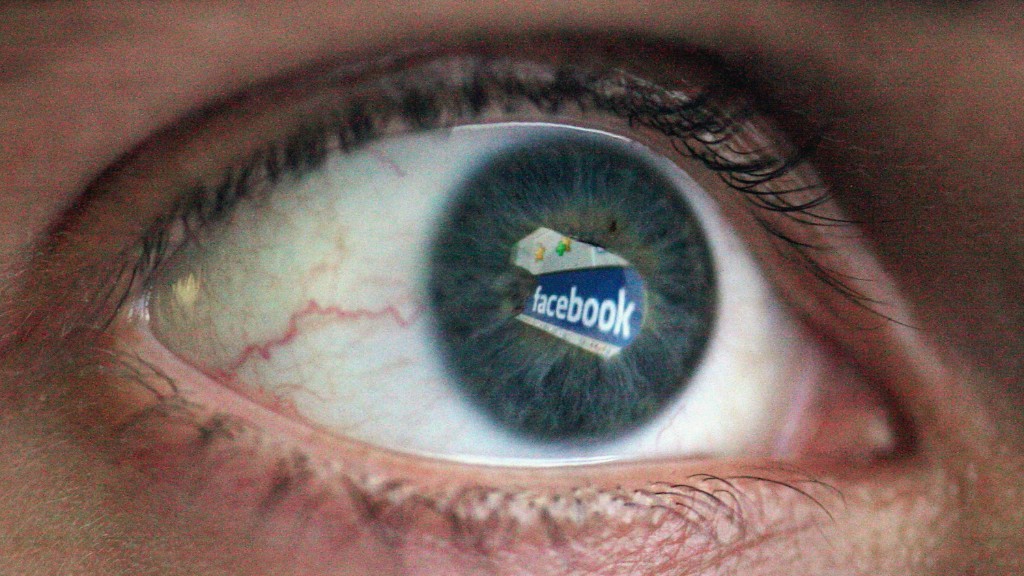

It's just the latest in a growing list of disturbing videos of murder, suicide, torture and beheading published on Facebook, however briefly, either through live broadcasts or video uploads.

The new video is reigniting old questions about how the social network handles offensive content: How many people does Facebook have moderating and flagging this type of content globally? What is the average response time for removing it? And does Facebook save the content for law enforcement after it's deleted?

Facebook, like some of its peers in the tech industry, has traditionally stayed vague on these details, other than pointing to its community standards. Facebook called the shooting "a horrific crime" in an earlier statement Monday.

"We do not allow this kind of content on Facebook," the company said in a statement provided to CNNTech. "We work hard to keep a safe environment on Facebook, and are in touch with law enforcement in emergencies when there are direct threats to physical safety."

A source close to Facebook says the company has "thousands" of people reviewing content around the world. Once users report a piece of content as inappropriate, it is typically reviewed "within 24 hours."

Facebook relies on a combination of algorithms, "actual employees" and its community of users to flag offensive content, says Stephen Balkam, founder and CEO of the Family Online Safety Institute, a longtime member of Facebook's safety advisory board.

"They have reviewers in Asia, they have reviewers in Europe and they have reviewers in North America," Balkam says. But at least some of that review work is likely done by lower-cost contractors in Southeast Asia, according to Balkam and others.

"It's work that is treated as low status [in Silicon Valley]," says Sarah T. Roberts, an assistant professor at UCLA who studies online content moderation. "It's not the engineering department. It's the ugly and necessary output of these platforms."

Roberts also criticizes the tech companies for placing some of the burden on users. "It's actually the users who are exposed to something that they find disturbing, and then they start that process of review," she says.

In the case of the most recent murder video, nearly two hours passed before users reported it on Facebook, according to the company. Facebook disabled the account behind the video 23 minutes after that.

In a lengthy manifesto about the future of Facebook published in February, CEO Mark Zuckerberg acknowledged "terribly tragic events -- like suicides, some live streamed -- that perhaps could have been prevented if someone had realized what was happening and reported them sooner."

Zuckerberg said Facebook is developing artificial intelligence to better flag content on the site. This system "already generates about one-third of all reports to the team that reviews content," according to Zuckerberg's post.

"It seems like a magic bullet, and it didn't help yesterday, did it?" Roberts says of the bet on AI.

Facebook isn't the only tech company going this route. Google (GOOGL) said earlier this month it is relying on AI to pinpoint content that could be objectionable to advertisers, after a big brand boycott over extremist content on YouTube.

But as Facebook and Google build up video hubs in search of TV ad dollars, even one offensive or extremist video that slips past human or AI moderators may be one too many.

"I don't think they understand that the correct answer is zero," says Brian Wieser, an analyst who covers both companies at Pivotal Research Group. "Of course stuff goes wrong and you will make efforts to solve that problem when those problems arrive. But the standard is zero."