Prime Minister Theresa May has called for closer regulation of the internet following a deadly terror attack in London.

At least seven people were killed in a short but violent assault that unfolded late Saturday night in the heart of the capital, the third such attack to hit Britain this year.

May said on Sunday that a new approach to tackling extremism is required, including changes that would deny terrorists and extremist sympathizers digital tools used to communicate and plan attacks.

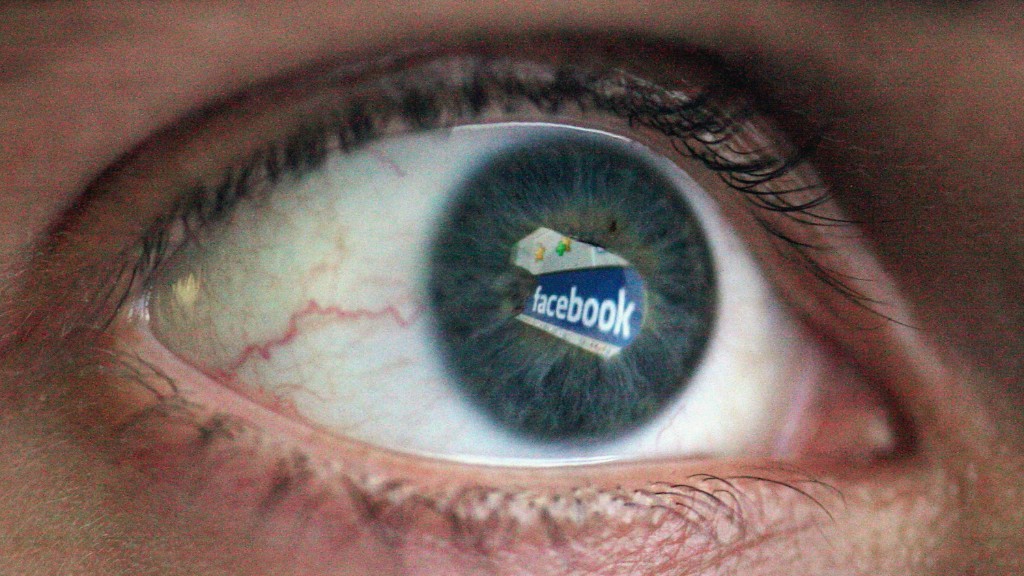

"We cannot allow this ideology the safe space it needs to breed," May said. "Yet that is precisely what the internet and the big companies that provide internet-based services provide."

"We need to work with allied democratic governments to reach international agreements that regulate cyberspace to prevent the spread of extremist and terrorism planning," she continued. "We need to do everything we can at home to reduce the risks of extremism online."

May's call for new internet regulations was part of a larger strategy to combat terror, including what she described as "far too much tolerance of extremism in our country."

It was not immediately clear how May would crack down on social media and internet firms, but she has long been an advocate of increased government surveillance powers.

Not everyone is convinced that additional restrictions would be effective.

Peter Neumann, a professor who studies political violence and radicalization at King's College London, said that blaming social media is "politically convenient but intellectually lazy."

Neumann said that few people are radicalized exclusively online, and that efforts by major social media firms to crack down on extremist accounts have pushed those conversations off public sites and onto encrypted messaging platforms.

"This has not solved problem, just made it different," he said on Twitter.

The attack comes as tech giants face increased pressure in Europe over their policing of violent speech and hate speech.

Europe's top regulator released data last week showing that Twitter (TWTR) had failed to take down a majority of hate speech posts after they were flagged. Facebook (FB) and YouTube fared better, removing 66% of reported hate speech.

On Sunday, Twitter pointed to data that showed it suspended more than 375,000 accounts in the second half of 2016 for violations related to the promotion of terrorism.

"Terrorist content has no place on Twitter," said Nick Pickles, Twitter's head of public policy in the U.K., in a statement to CNNMoney. Pickles said the company will "never stop working" on the issue.

Google (GOOGL) said that it "shares the government's commitment to ensuring terrorists do not have a voice online" and that it was working with its partners "to tackle these challenging and complex problems."

Facebook (FB) said in a widely reported statement that it wants to be a "hostile environment for terrorists." Simon Milner, the company's director of policy, added that the social media platform works "aggressively" to remove terrorist content.

In the U.K., a parliamentary committee report published last month alleged that social media firms have prioritized profit over user safety by continuing to host unlawful content. The report also called for "meaningful fines" if the companies do not quickly improve.

"The biggest and richest social media companies are shamefully far from taking sufficient action to tackle illegal and dangerous content," the report said. "Given their immense size, resources and global reach, it is completely irresponsible of them to fail to abide by the law."

Forty-eight people were injured in Saturday's attack on London Bridge and Borough Market. Police officers pursued and shot dead three attackers within eight minutes of the first emergency call, London police said.

-- Ivana Kottasová contributed reporting.