The smallest things we do can give away our biggest secrets.

According to researchers at the University of Padova in Italy, how a person moves a mouse when answering questions on a computer may reveal whether or not they're lying. The finding has the potential to identify everything from fake online reviews and fraudulent insurance claims to pedophiles and terrorists, the team suggests.

The researchers used an artificial intelligence algorithm trained to make decisions based on data. The computer system was presented with labeled examples from individuals answering questions honestly and from those providing false answers. With experience, the algorithm began to identify the differences in mouse movements between honest and dishonest answers.

During the study, which involved 60 students at the University of Padova, participants answered a series of questions -- some of which were unexpected. Half were told to assume a false identity and given time to practice it.

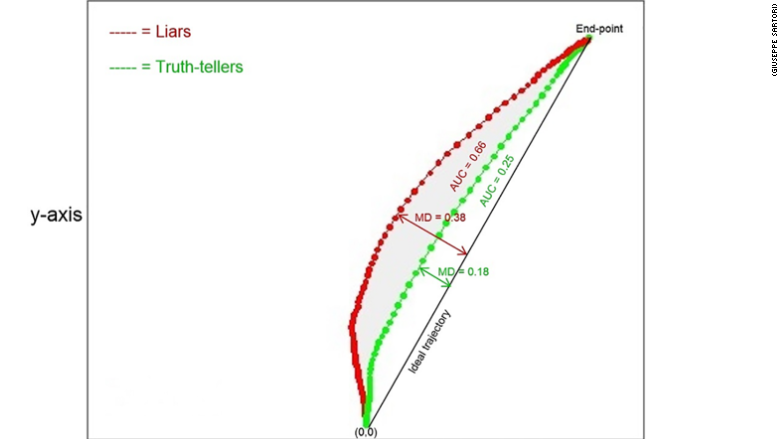

Truthful individuals slid their mouse directly to an answer. Dishonest individuals took a longer, indirect path to theirs.
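
One simple way to put a number on "direct" versus "indirect" is to compare the straight-line distance between where the cursor starts and ends with the distance it actually travelled. The Python sketch below is only an illustration of that idea, not the researchers' method: the function names, the sample coordinates, and the choice of this particular ratio are all assumptions made for the example.

```python
import math

def path_length(points):
    """Total distance travelled along a sequence of (x, y) cursor samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def directness(points):
    """Straight-line distance divided by travelled distance.

    A value near 1.0 means the cursor went almost straight to its target;
    smaller values mean a longer, more roundabout path.
    """
    travelled = path_length(points)
    if travelled == 0:
        return 1.0  # the cursor never moved, so treat the path as direct
    return math.dist(points[0], points[-1]) / travelled

# Hypothetical cursor samples, invented for illustration only.
direct_path = [(0, 0), (50, 50), (100, 100)]
wandering_path = [(0, 0), (80, 10), (20, 60), (100, 100)]

print(round(directness(direct_path), 3))
print(round(directness(wandering_path), 3))
```

The wandering path scores lower than the direct one. A real system would likely feed many such measurements, not just one, into a trained classifier.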

"Our brain is built to respond truthfully. When we lie, we usually suppress the first response and substitute it with a faked response," said Giuseppe Sartori, lead researcher and a University of Padova professor.

The study was published recently in the online journal PLOS ONE.

Sartori envisions the technology helping authorities identify terrorists who are entering European countries under false identities. His technique does not require authorities to know certain information about a person, such as their actual birthday or address, to determine whether they are lying. Instead, authorities could detect lies by the manner in which specific questions are answered.

Sartori said the technology could also be used to identify a pedophile who signed up for an online service with a false age.

A well-coached individual could learn to lie convincingly with quick responses to questions. But they might stumble on tangential questions, such as stating their zodiac sign or a cross street near their home address.

However, there are limitations to the approach -- artificial intelligence is only as good as the data it's trained on. Sartori said more subjects need to be studied to ensure the results accurately reflect all human behavior.

The team's next step is to examine the differences between how honest and dishonest individuals type on a keyboard when answering questions.