The future is arriving even faster than the predictions depicted in "Minority Report," a film long hailed for being prescient.

Steven Spielberg's dystopian film was released 15 years ago this week.

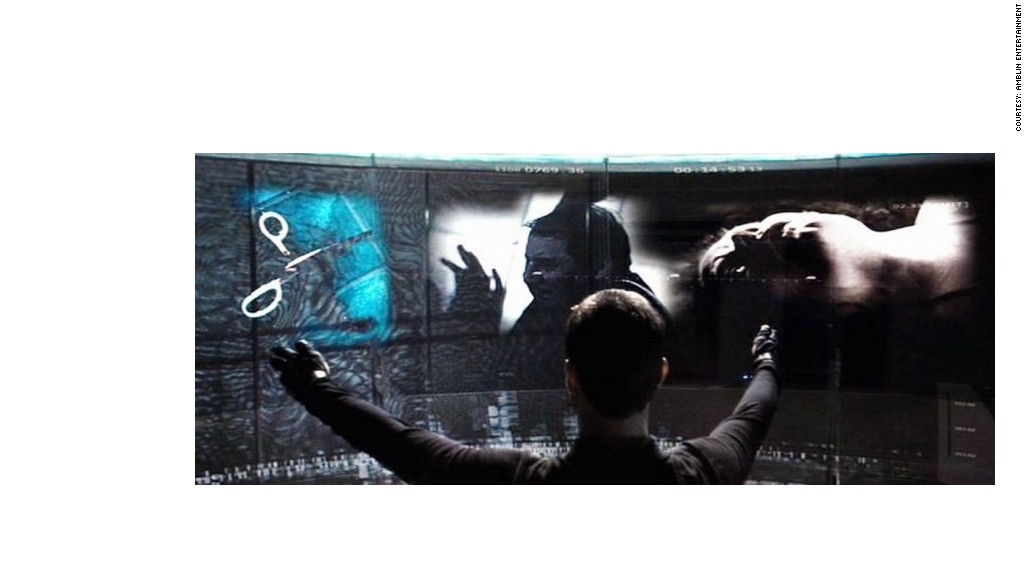

"Minority Report," set in 2054, included self-driving cars, personalized ads, voice automation in the home, robotic insects and gesture-controlled computers -- all technology entering our lives in 2017.

But many experts warn one of the technologies highlighted in the film, predictive policing, is used recklessly today.

Although police departments don't race through neighborhoods on jetpacks to disrupt murders -- as they did in the film -- data and artificial intelligence are already used to predict the location of potential crimes and dispatch officers to those areas. A 2016 study from the organization Upturn found 20 of the nation's 50 largest police forces have used predictive policing.

The film served as a cautionary tale of the dangers of technology, but that isn't its legacy.

"Minority Report has become less of a cautionary tale and more of a blueprint, especially in predictive policing," said Suresh Venkatasubramanian, a computer science professor at the University of Utah.

According to Andrew G. Ferguson -- a professor at the University of the District of Columbia law school and author of the upcoming book "The Rise of Big Data Policing" -- police departments are drawn to the technology's potential as a quick fix seen as objective and trustworthy.

"Police agencies are adopting this technology because the lure of black box policing is so attractive," Ferguson said. "Every chief has to answer the unanswerable question, 'What are you doing about crime rates?'"

In "Minority Report," the local police department's psychic cyborgs conclude the film's main character, played by Tom Cruise, will commit a murder. While trying to prove his innocence, Cruise learns that the cyborgs lack consistent judgment.

But that lesson isn't widely understood with today's crime prediction systems, Venkatasubramanian argues.

"We're treating algorithms as if they're infallible and true arbiters of how people are going to behave, when of course they're nothing of the sort," Venkatasubramanian said.

A Chicago Police Department program has used artificial intelligence to identify people at high risk of gun violence, but a 2016 Rand Corporation study found the tactic was ineffective.

"When you have machine learning algorithms making decisions, they will often make mistakes," Venkatasubramanian said. "Rather than trying to hide the fact they will make mistakes, you want to be very open and up front about it."

Venkatasubramanian is among a community of researchers concerned about the inaccuracy of algorithm predictions. He points to the problem of how algorithms learn from feedback. For example, if officers spend more time observing a certain neighborhood, its arrest statistics may increase even if the underlying crime remains consistent.

If machines are trained on biased data, they too will become biased. Communities with a history of being heavily policed will be disproportionately affected by predictive policing, research has warned.
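
The feedback problem described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not a model of any department's or vendor's actual system: the function name `simulate_feedback`, its parameters and all numbers are hypothetical. It assumes patrols are assigned in proportion to past recorded arrests, and that arrests are only recorded where officers are present.

```python
def simulate_feedback(initial_records, true_crime_rates, days, patrols_per_day=10):
    """Toy model of a predictive-policing feedback loop (hypothetical).

    initial_records  -- arrests already on file for each neighborhood
    true_crime_rates -- expected arrests per patrol in each neighborhood
    Returns the final recorded-arrest totals per neighborhood.
    """
    records = list(initial_records)
    for _ in range(days):
        total = sum(records)
        # The "prediction": send patrols where past arrests were recorded.
        shares = [r / total for r in records]
        for i, share in enumerate(shares):
            patrols = patrols_per_day * share
            # Arrests are only recorded where officers are looking.
            records[i] += patrols * true_crime_rates[i]
    return records


# Two neighborhoods with identical underlying crime, but neighborhood 0
# starts with slightly more arrests on file (6 versus 4).
final = simulate_feedback([6, 4], [0.5, 0.5], days=100)
gap = final[0] - final[1]
share = final[0] / sum(final)
print(f"recorded arrests: {final}, gap: {gap:.0f}, share: {share:.2f}")
```

In this sketch the two neighborhoods have the same underlying crime rate, yet the arrest gap between them keeps widening in absolute terms and the more heavily policed neighborhood's share of recorded arrests never drifts back toward half. The data appears to confirm the original skew because the system only measures where it already looks.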

According to Kristen Thomasen -- a law professor at the University of Windsor, who studies the ethics of artificial intelligence -- the dystopian fears raised in the film should be felt today.

Thomasen points to how artificial intelligence systems have been used to aid judges in determining criminal sentences. In these cases, AI systems rate a criminal's likelihood of committing a future crime. (A landmark ProPublica piece on risk assessment scores, published in May 2016, ignited a debate among computer scientists on the role of artificial intelligence in policing.)

As of now, the algorithms aren't broadly available for outsiders to effectively analyze whether the systems have biases. Some of the algorithms' creators argue their technology is a trade secret, and revealing it would damage their business.

"That makes it even more challenging to make a case in favor of your innocence or explaining why this prediction is wrong," Thomasen said. "There's a loss of access to justice. It's a fundamental principle of the justice system that you have the ability to defend yourself."

While "Minority Report" warned us about these problems, 15 years hasn't been enough time for society to absorb the warning.

"Is Minority Report an anthem for our time, about the use of algorithms in our life? No, not yet," Venkatasubramanian said. "Maybe people will come back in 20 years and say, 'Hey, that was pretty spot on.'"