Messaging assistants and smart replies are meant to make lives easier by anticipating responses when chatting with friends.

But tools from Google and Facebook sometimes struggle to appropriately insert themselves into conversations.

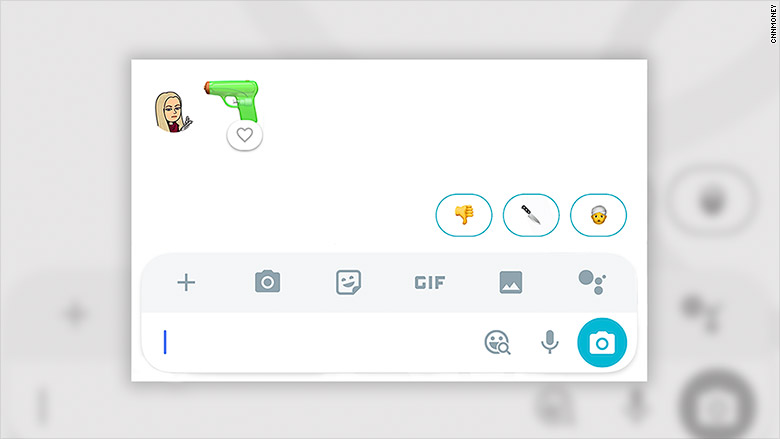

For example, the smart reply feature in Google's Allo messaging app last week suggested I send the "person wearing turban" emoji in response to a message that included a gun emoji.

My CNN Tech colleague was able to replicate the exchange in a separate chat. Google has fixed the response and issued an apology.

"We're deeply sorry that this suggestion appeared in Allo and have immediately taken steps to make sure nobody gets this suggested reply," the company said.

Smart replies are separate from the company's Google Assistant, its Siri- and Alexa-like voice-activated service. Instead, smart replies are real-time suggested responses based on the conversation you're having.

It's unclear what provoked that suggested emoji response in Google's Allo, but there are a few issues that could be at play. Bots, smart replies, and virtual assistants know what humans teach them -- and because they're programmed by humans, they could contain biases.

Google smart replies are trained and tested internally before they are widely rolled out to apps. Once on our phones, they learn responses based on the individual conversations taking place in the app. You tap on the suggested response to send it. I had never used the emoji before Allo suggested it.

Learning algorithms aren't transparent, so it's hard to tell why exactly smart replies or virtual assistants make certain suggestions.

"When a reply is offensive, we'd like to explain where that came from, but typically can't," said Bertram Malle, co-director of the Humanity-Centered Robotics Initiative at Brown University. "[The Allo exchange] may have occurred because of a built-in bias in its training database or its learning parameters, but it may be one of the many randomly distributed errors that present-day systems make."

Jonathan Zittrain, professor of law and computer science at Harvard University, said the issues around smart replies are reminiscent of the offensive automated suggestions that have popped up in Google (GOOG) searches over the years.

There are preventative measures apps can take to avoid offensive responses in messaging, but there's no one-size-fits-all solution.
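
One such measure is a list of pairings to block, specified up front and checked before any suggestion is shown. The sketch below is only an illustration of that idea, not how Allo or M actually work: the function name, the plain-text emoji labels, and the single blocked pair (taken from the exchange described above) are all assumptions.

```python
# Hypothetical list of (message content, suggested reply) pairs to suppress.
# Plain-text labels stand in for emoji; a real system would need far more entries.
BLOCKED_PAIRS = {
    ("gun", "person wearing turban"),
}


def filter_suggestions(message_tokens, candidates, blocked=BLOCKED_PAIRS):
    """Return the candidate replies that do not form a blocked pair
    with anything in the incoming message."""
    tokens = set(message_tokens)
    return [
        reply
        for reply in candidates
        if not any((token, reply) in blocked for token in tokens)
    ]


# The blocked reply is dropped; the other candidates pass through unchanged.
print(filter_suggestions(
    ["gun"],
    ["person wearing turban", "thumbs up", "See you soon"],
))
# prints ['thumbs up', 'See you soon']
```

A fixed list like this only catches pairings someone thought to write down in advance.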

"[There are] red flag associations you specify up front and try to preempt," Zittrain said. "But what counts as offensive and what doesn't will evolve and differs from one culture to another."

Facebook has a virtual helper in its Messenger app, too: Facebook M. The feature runs in the background of the app and pops up to make suggestions, such as prompting you to order an Uber when it knows you're making plans.

Suggested responses might be useful if you're in a hurry and on your phone -- for example, an automated response could offer to send "See you soon."

But emoji and stickers may complicate matters by trying to infer sentiment or human emotion.

"A lot of [complication] arises from asking machine learning to do its thing and advise us not just on shortcuts to obvious answers but on emotions, emoji and more complicated sentiments," Zittrain said.

Facebook (FB) debuted its latest version of M in April, nearly two years after it launched the feature as an experiment. Although it began as a "personal digital assistant" and was operated partially by humans, suggestions are now automated. It's available to Facebook Messenger users in a handful of countries.

Like Google's smart replies, Facebook M is trained and tested internally before it is widely rolled out, and it then learns responses based on the conversations taking place in the app. You tap on a suggested response to send it.

I've been using Facebook M for months, and it often recommends responses that don't fit. In one instance, my friend and I were discussing a fiction book that involved exsanguinated corpses. M suggested we make dinner plans.

It also missed the mark on more sensitive conversations. M suggested a vomit sticker following a description of a health issue affecting millions of women each year.

A spokesperson for Facebook said the company plans to roll out, in the coming weeks, a way for people who dismiss or ignore one of M's suggestions to say explicitly whether it was helpful.

Companies have struggled with artificial intelligence in chat before. Apple's predictive autocomplete feature previously suggested only male emoji for executive roles including CEO, COO, and CTO.

Microsoft's first attempt at a social media AI bot, named Tay, failed when the bot started to learn racist and offensive language taught by the public. The company tried a second time with a bot named Zo and said it had implemented safeguards to prevent bad behavior. However, that bot picked up bad habits, too.

Google works to combat bias in machine learning training by looking at data more inclusively, through characteristics including gender, sexual orientation, race, and ethnicity. But as with any big data set, errors can get through to the public.

Flaws in these messaging apps show AI assistant technology is still relatively nascent. If not properly programmed and executed, automated chat services like bots and assistants will be ignored by users.

"It's already very hard to build trust between machines and humans," said Lu Wang, an assistant professor at the College of Computer and Information Science at Northeastern University. "A lot of people are skeptical about what they can do and how well they can serve society. Once you lose that trust, it gets even harder."