The biggest online platforms have unveiled their latest attempt to fight fake news.

Facebook (FB), Google (GOOGL) and Twitter (TWTR) said Thursday they have committed to using new "trust indicators" to help users better vet the reliability of the publications and journalists behind articles that appear in news feeds.

The indicators were developed by the Trust Project, a non-partisan effort operating out of Santa Clara University's Markkula Center for Applied Ethics, to boost transparency and media literacy at a time when misinformation is rampant.

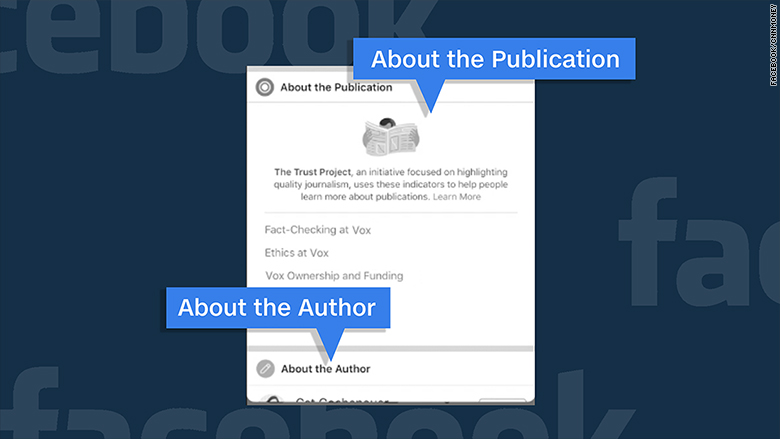

Facebook, which has faced particularly strong criticism over the spread of fake news on its platform, began testing the indicators on Thursday. Select publishers will have the option to upload additional information about their fact-checking policies, ownership structures, author histories and more.

When you see an article from Vox, for example, Facebook may show an icon you can tap to learn more, including what Vox's ethics policy is and who funds it.

"This step is part of our larger efforts to combat false news and misinformation on Facebook — providing people with more context to help them make more informed decisions," Andrew Anker, a product manager at Facebook, wrote in a blog post.

Google said it is currently looking into how best to display the indicators next to articles that show up in Google News, Google's search engine and other products. Twitter declined to comment beyond the announcement put out by the Trust Project.

"In today's digitized and socially networked world, it's harder than ever to tell what's accurate reporting, advertising, or even misinformation," Sally Lehrman, the journalist who heads the Trust Project, said in a statement. "The Trust Indicators put tools into people's hands, giving them the means to assess whether news comes from a credible source they can depend on."

Among other details, the indicators will show whether a story is a news report or advertising, highlight other articles the author has published and offer more clarity on the sources used to back up various claims in the story.

The Washington Post, The Economist, The Globe and Mail, and other publications are among the initial group of publishers using the indicators.

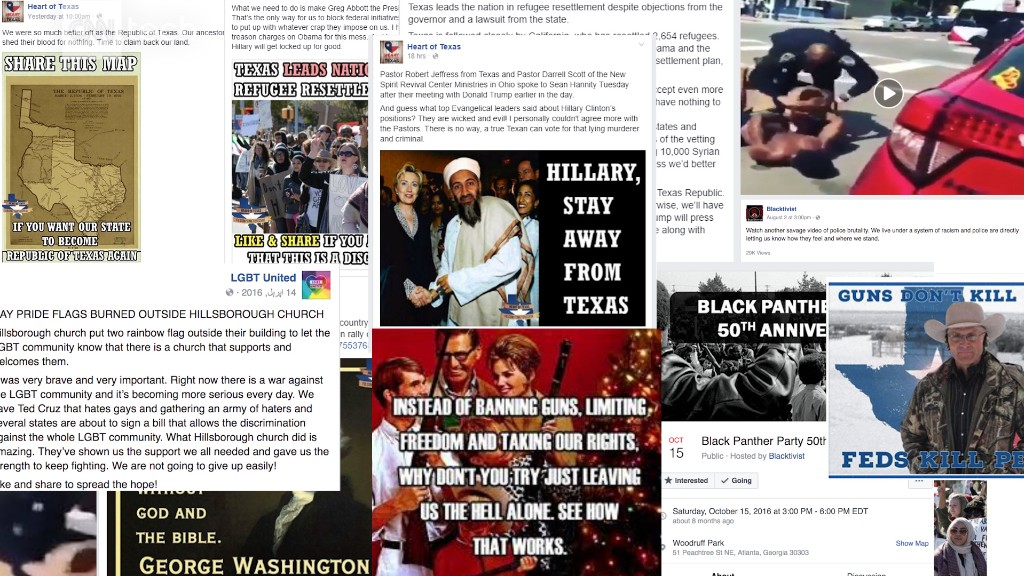

Facebook, Google and Twitter were grilled by Congress earlier this month over how foreign nationals used social media platforms to spread misinformation during the 2016 presidential election.

At the core of this new scrutiny is a question about whether these companies can properly police the content shared on their own platforms, given their massive audiences.

In the year since the election, the tech companies have tried to show they can do better. Facebook and Google have worked with independent fact-checking organizations to flag questionable articles. Facebook also introduced related links to provide additional perspectives on stories shared in the News Feed.

Separately, Facebook and Twitter have each announced plans to increase transparency for political advertisements that appear on their sites, as U.S. legislators weigh potential regulation for this issue.