It's Silicon Valley vs. Washington, take two.

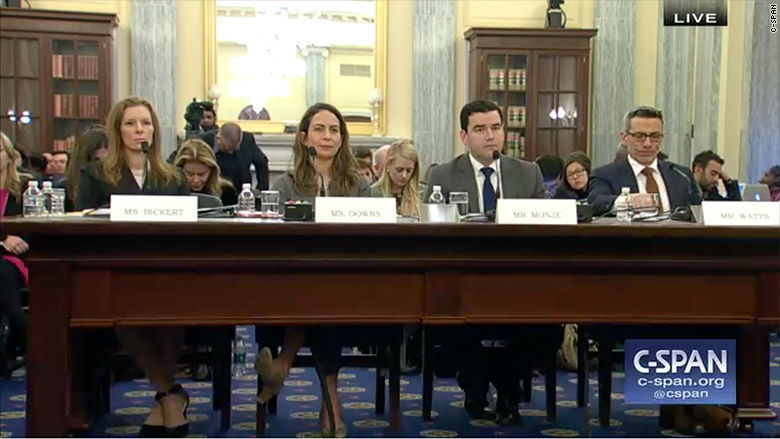

Executives from Facebook (FB), Twitter (TWTR) and Google's (GOOGL) YouTube defended their attempts to prevent the spread of extremist content on their platforms in a Senate hearing on Wednesday.

Testifying before the Senate Commerce Committee, the social media firms stressed the increasing effectiveness of artificial intelligence tools at spotting posts from terrorists.

Monika Bickert, Facebook's head of policy and counterterrorism, said 99% of terrorism content from ISIS and Al Qaeda is detected and removed before any user reports it, thanks to automated tools such as photo and video matching.

Juniper Downs, YouTube's director of public policy, noted that 98% of violent extremist videos removed from the service are now identified by algorithms, up from 40% last June.

Some members of the Senate committee expressed skepticism, however. Sen. Bill Nelson, a Democrat representing Florida, described the use of AI tools for "screening out most of the bad guys' stuff" as "encouraging," but "not quite enough."

"These platforms have created a new and stunningly effective way for nefarious actors to attack and to harm," Nelson said.

That sense of concern was amplified by Clint Watts, a senior fellow at Georgetown's Center for Cyber and Homeland Security, who joined the three executives in testifying before the committee.

"Social media companies continue to get beat in part because they rely too heavily on technologists and technical detection to catch bad actors," Watts said in prepared remarks. AI and other technical solutions "will greatly assist in cleaning up nefarious activity, but will for the near future, fail to detect that which hasn't been seen before."

The Facebook and YouTube execs each noted previously announced plans to recruit thousands of additional workers to review content across their platforms, including extremist content.

Facebook, Google and Twitter were grilled by Congress late last year in a series of hearings on how foreign nationals used social media to meddle in the 2016 election by spreading misinformation and trying to sow discord among voters.

Wednesday's hearing focused on domestic and international terrorist activity on the platforms. But some senators also resumed questioning the companies about Russian propaganda, fake news and political advertising transparency, with an eye toward the midterm elections.

Carlos Monje Jr., Twitter's director of public policy and philanthropy, said the company is still working to notify users who were exposed to content from a troll farm with links to the Kremlin.

Monje was also grilled by Sen. Brian Schatz, a Democrat from Hawaii, about Twitter's struggle to crack down on fake accounts, and Monje admitted that the users behind them "keep coming back."

"Based on your results, you're not where you need to be for us to be reassured that you're securing our democracy," Schatz said. "How can we know that you're going to get this right and before the midterms?"