YouTube Kids has struggled with disturbing videos, like beloved Disney characters in violent or sexual situations, sneaking past its automated filters.

Now the app is adding new settings to give parents more control over what videos their kids can see.

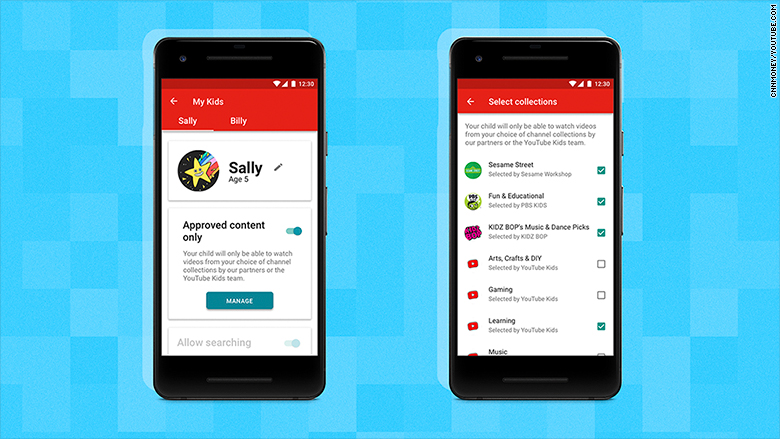

The company announced Wednesday an option to filter videos by "approved content only," so parents can whitelist channels and subjects.

The effort comes four months after reports called attention to troves of videos with inappropriate themes on the video-sharing site's kid-friendly platform. Content for YouTube Kids is selected from the main YouTube app and screened using machine learning algorithms. But some videos, such as cartoons disguised as age-appropriate, slip through the cracks.

Once the new setting is turned on, users can pick collections from trusted creators such as PBS and Kidz Bop, or themed collections curated by YouTube Kids itself.

YouTube is launching another tool later this year that will let parents choose every video or channel their kid can see in the app.

"While no system is perfect, we continue to fine-tune, rigorously test and improve our filters for this more open version of our app. And, as always, we encourage parents to block and flag videos for review that they don't think should be in the YouTube Kids app," the company said in a blog post announcing the new features.

It's the kind of control parents have been asking for from the popular app, but it also puts the onus on them to filter content. It's a side effect of the way YouTube Kids finds its videos.

When a video for children is uploaded to the main YouTube platform, it is not automatically added to the YouTube Kids library. The videos are reviewed by machine learning algorithms to determine whether or not they are appropriate for the app.

The automated process -- one YouTube calls very thorough -- can take days. A human doesn't check the videos before they're added, but parents can later flag videos they find alarming, and a content reviewer will then check them.

However, it's unlikely parents are constantly watching YouTube Kids videos along with their children. It's possible this safeguard isn't sufficient for catching every odd video your kid might see.

The flag-the-video-later system has created some problems for the service. Some YouTube creators have uploaded concerning videos marked as kids' content that slip past the screening process. These videos have included Spider-Man urinating on Frozen's Elsa, Peppa Pig drinking bleach, and Mickey Mouse getting run over by a car.

The company continues to change its rules to crack down on the issue, but creators are constantly looking for ways to trick the system.

YouTube Kids still has its main library available by default for now, and the company says it is working on more controls for parents.