Microsoft is trying to avoid another PR disaster with its new AI bot.

The company's latest program can describe what it "sees" in photos.

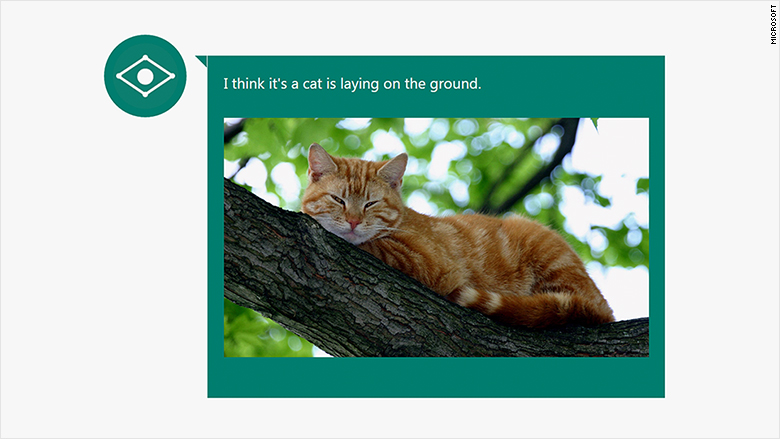

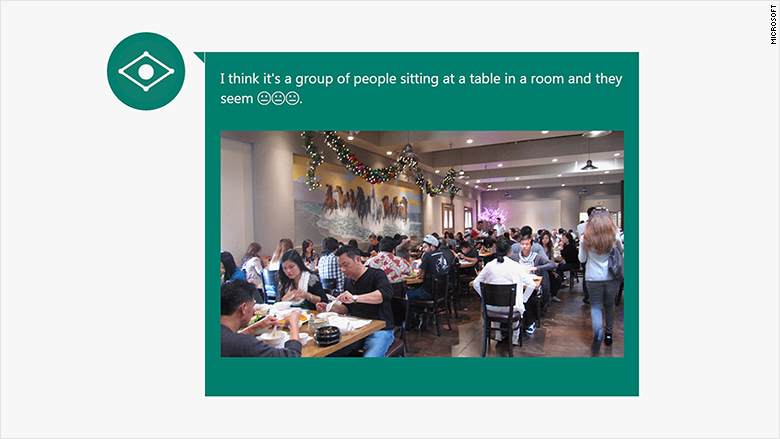

"CaptionBot," as it's called, does a pretty decent job of describing simple everyday scenes, such as a person sitting on a couch, a cat lounging around, or a busy restaurant. But it seems to be programmed to ignore pictures of Nazi symbolism or of the party's leader.

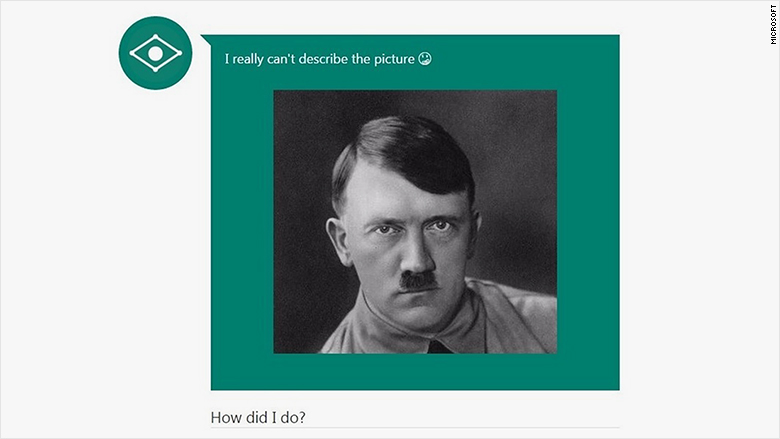

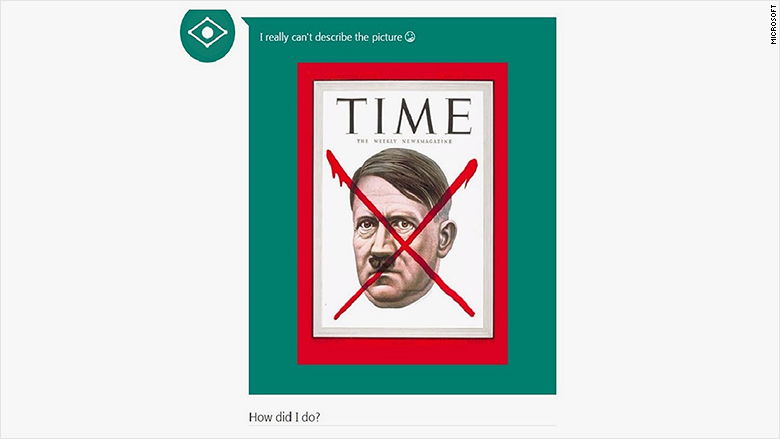

CNNMoney gave CaptionBot several photos of Adolf Hitler and variations of the swastika to analyze, and it often came back with "I really can't describe the picture" and a confused emoji. It did, however, identify other Nazi figures such as Josef Mengele and Joseph Goebbels.

Microsoft (MSFT) released CaptionBot a few weeks after its disastrous social experiment with Tay, an automated chat program designed to talk like a teen.

Shortly after Microsoft put Tay to work on Twitter, the bot began tweeting incredibly racist comments like "Hitler was right I hate the jews."

The company blamed the bot's behavior on a "coordinated effort" by online trolls to teach and trick the program into saying hateful things, and it took Tay offline after less than a day.


In addition to ignoring pictures of Hitler, CaptionBot also seemed to refuse to identify people like Osama bin Laden. But the program had no problems identifying Mao Zedong, Pol Pot, or Saddam Hussein.

"We have implemented some basic filtering in an effort to prevent some abuse scenarios," a Microsoft spokesperson said.

Because many bots are driven by AI and machine learning technologies, they can evolve over time based on their interactions with humans.

That's why Tay went off the rails and why Microsoft and other tech companies need to be cautious about the rules they place inside their code.

After CaptionBot spits back what it thinks it sees in a photo, it asks for feedback in the form of a five-star rating.

The Microsoft Cognitive Services team ingests that feedback along with data from the image to train CaptionBot to become more accurate with its descriptions.

Microsoft could be overcompensating, however. Suppressing certain kinds of information may be the politically correct solution, but it exposes the company to accusations of censorship.


Bots and AI are the most talked-about topics in tech right now. Generally speaking, bots are software programs designed to hold conversations with people about data-driven tasks, such as managing schedules or retrieving information.

Facebook (FB) spent a great deal of time talking about the new bot platform for its Messenger app during its developer conference this week. Facebook announced that companies can now build bots to do everything from banking to ordering food and making restaurant reservations.

Kik, a messaging app popular with teens, also announced the opening of a similar service called the "Bot Shop" earlier this month.

Image recognition software has become increasingly important too, as people produce and share ever more photos and videos online.

Facebook, Google (GOOGL), and Microsoft are among the tech giants eager to show off their progress in this area. Just think about the way Facebook suggests people to tag in photos, or the way Google Photos catalogs and searches your files. Last summer, Microsoft released "How-Old.net," a bot that guesses a person's age based on a photo.